Deployment with Containers

Deployment Overview

See the Deployment Overview chapter for a brief outline of jiffy-application deployment artifacts.

All-In-One Deployment with Docker

In a development or test setting it may make sense to deploy everything in one container. SQLite is a good choice for this type of container deployment, as it is a file-based database and therefore does not need to be started as a service. Containers typically offer a single service each, and their configuration is geared to this usage pattern. While it is possible to run Postgres, a KVS and a jiffy-application in the same container, it is an atypical scenario and a little cumbersome to set up. By using SQLite and dispensing with the KVS, we only need to run the jiffy-application in the container.

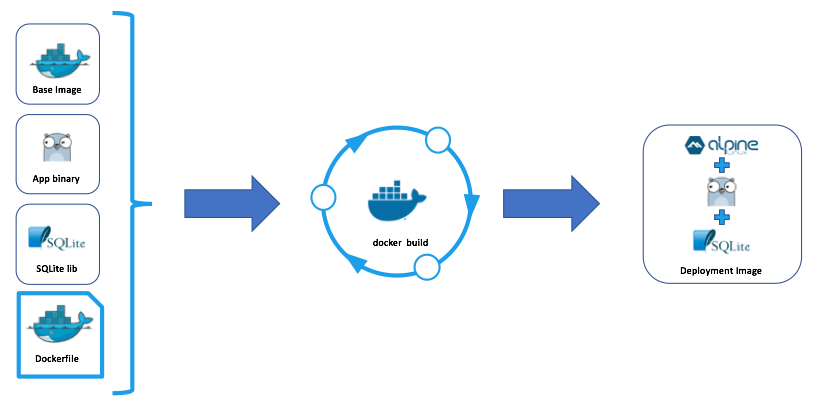

The docker build command can be used in conjunction with a Dockerfile to generate a new Docker image complete with the application, the SQLite library and a sparse Alpine Linux install. docker build also assigns an IPv4 address to the container image by default. This IP address needs to be added to the application’s configuration file by running a custom script during the image build.

See the Jiffy with Docker and SQLite tutorial for an example of an end-to-end all-in-one Jiffy application deployment with Docker and SQLite.

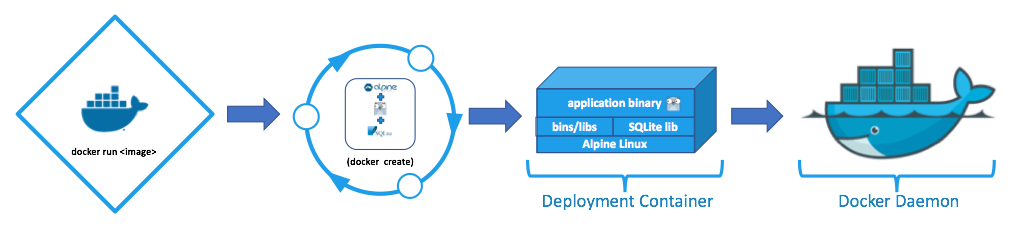

The new Docker image can then be used to create a container, which the Docker daemon manages and runs.

See the sample tutorial referenced above for the Dockerfile, application configuration file and scripting required to support this type of deployment.

Single-Instance Deployment with Docker

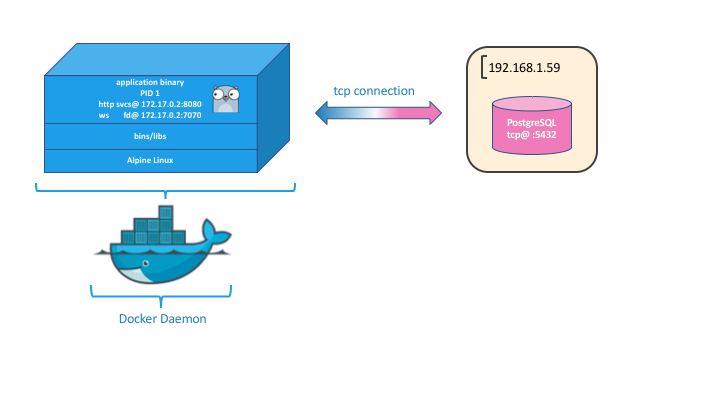

Single-instance deployments contain one jiffy-application instance, an optional KVS and a database. In this example scenario the jiffy-application is deployed in a Docker container, the database is running on a separate host and the stand-alone/local KVS is used. IP addresses are shown for illustrative purposes only, and it is implied that Docker’s default bridge network is used. See the All-In-One Deployment with Docker section for a brief overview of the Docker containerization of a jiffy-application.

The docker build command can be used in conjunction with a Dockerfile to generate a new Docker image containing the application and a sparse Alpine Linux install. docker build also assigns a static IPv4 address to the container image by default. This IP address needs to be added to the application’s configuration file by running a custom script during the image build.

See the Jiffy with Docker and External PostgreSQL tutorial for an example of an end-to-end deployment of a single jiffy application container talking to a PostgreSQL database on the host’s network.

The Single-Instance Deployment diagram shows a jiffy-application instance running in a container with an IPv4 address of 172.17.0.2. The container is using Docker’s default bridge network, as suggested by the first two octets of the IP address (172.17). The application instance is accepting HTTP client connections on port :8080 and using port :7070 to listen for failure-detector and cache-synchronization messages. The failure-detector ‘bus’ is used for group-leadership and inter-process cache messages, even when the application is run standalone (single process).
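The observation about the address prefix can be checked programmatically. The sketch below assumes Docker's stock default bridge subnet of 172.17.0.0/16; a daemon configured with a different bridge subnet would need a different prefix. The function name `onDefaultBridge` is illustrative.

```go
package main

import (
	"fmt"
	"net/netip"
)

// defaultBridge is the subnet Docker uses for its default bridge network
// unless the daemon has been configured otherwise (an assumption here).
var defaultBridge = netip.MustParsePrefix("172.17.0.0/16")

// onDefaultBridge reports whether addr falls inside defaultBridge.
func onDefaultBridge(addr string) (bool, error) {
	ip, err := netip.ParseAddr(addr)
	if err != nil {
		return false, err
	}
	return defaultBridge.Contains(ip), nil
}

func main() {
	// The container address and the database host address from the example.
	for _, a := range []string{"172.17.0.2", "192.168.1.31"} {
		ok, err := onDefaultBridge(a)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s on default bridge: %v\n", a, ok)
	}
}
```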

It is not necessary to run an external KVS if only one container/jiffy-application instance will be run. When running without a KVS, set the ‘local_standalone’ KVS to active in the xxx.config.json file. The application instance will assign itself a PID of 1 and assert leadership inside its own process. In short: don’t worry about the KVS if you want to run a single instance of your jiffy-application.

If you do choose to run an All-In-One or single application instance with a KVS, the easiest way to get started is to use sluggo by setting the ‘sluggo’ KVS to active in the xxx.config.json file. You may also choose to use Redis or Memcached, or create your own implementation of the gmcom.GetterSetter interface to support another KVS.

The application communicates with the KVS using whatever protocol/transport is required. Remember that the selection of KVS is arbitrary and an interface is provided to allow the implementer to write their own code to communicate with any KVS.
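To illustrate the idea of hiding the KVS behind an interface, here is a minimal sketch. The `kvStore` interface, its `Get`/`Set` methods and the `"leader"` key are hypothetical stand-ins written for this example; the actual method set of gmcom.GetterSetter is not reproduced here and may well differ, so consult the Jiffy source before writing a real implementation.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// kvStore is a hypothetical stand-in for a KVS abstraction. It is NOT the
// gmcom.GetterSetter interface, whose real methods may differ.
type kvStore interface {
	Get(key string) (string, error)
	Set(key, value string) error
}

var errNotFound = errors.New("key not found")

// memStore is an in-process implementation guarded by a read/write mutex.
type memStore struct {
	mu   sync.RWMutex
	data map[string]string
}

func newMemStore() *memStore {
	return &memStore{data: make(map[string]string)}
}

func (m *memStore) Get(key string) (string, error) {
	m.mu.RLock()
	defer m.mu.RUnlock()
	v, ok := m.data[key]
	if !ok {
		return "", errNotFound
	}
	return v, nil
}

func (m *memStore) Set(key, value string) error {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.data[key] = value
	return nil
}

func main() {
	// Callers depend only on the interface, so the backing store can be swapped.
	var kvs kvStore = newMemStore()
	if err := kvs.Set("leader", "1"); err != nil { // "leader" is an illustrative key
		fmt.Println("error:", err)
		return
	}
	v, err := kvs.Get("leader")
	fmt.Println(v, err)
}
```

An implementation backed by Redis, Memcached or another store would satisfy the same interface while handling its own protocol and transport internally.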

The application is also shown accessing a Postgres database on the host’s network via TCP at 192.168.1.31:5432. In this example we show PostgreSQL accepting connections on its default port (tcp/5432), but any of the supported databases can be used.
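For reference, the address above can be expressed as a PostgreSQL connection URI. The sketch below only builds the string; the user, password and database names are placeholders, `sslmode=disable` is chosen purely for a local test setup, and whether a jiffy-application takes a URI or separate host/port settings in its configuration file is not covered here.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"strconv"
)

// postgresURL builds a PostgreSQL connection URI for the given parameters.
func postgresURL(user, password, host string, port int, dbname string) string {
	u := url.URL{
		Scheme:   "postgres",
		User:     url.UserPassword(user, password),
		Host:     net.JoinHostPort(host, strconv.Itoa(port)),
		Path:     "/" + dbname,
		RawQuery: "sslmode=disable", // placeholder choice for a test network
	}
	return u.String()
}

func main() {
	// Credentials and database name are placeholders, not Jiffy defaults.
	fmt.Println(postgresURL("jiffy", "secret", "192.168.1.31", 5432, "jiffydb"))
}
```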

Multi-Instance Deployment with Docker

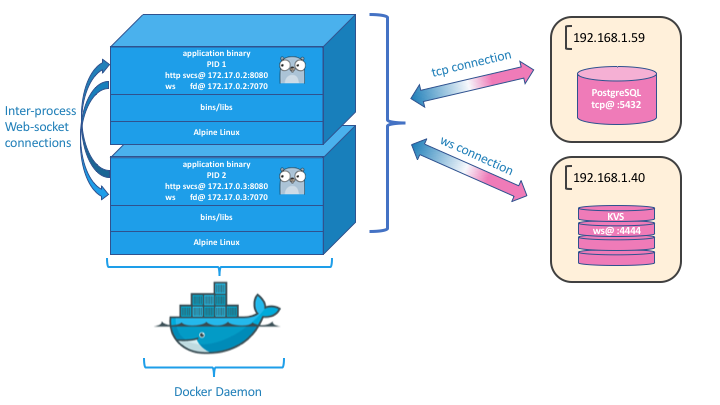

Multi-instance deployments refer to non-swarm Docker deployments of more than one jiffy-application container, a KVS and a database. In this scenario the database and KVS are running on separate systems on the host network. When deploying more than one instance of a jiffy-application the KVS is not optional, as the group-leadership sub-system requires it in order to globally persist the group-leader information. It would be possible to run the KVS and the database in Docker containers as well, but we will start by running only instances of the containerized jiffy-application in Docker.

The Multi-Instance Deployment diagram shows two instances of a containerized jiffy-application running in Docker. Each application instance is accepting HTTP client connections on port :8080 and using port :7070 to listen for failure-detector and cache-synchronization messages. The failure-detector ‘bus’ is used for group-leadership and inter-process cache messages.

The KVS is used to hold the current group-leader information when jiffy applications are deployed with more than one instance. See the Interprocess Communication section for details regarding the use of the KVS with group-membership and group-leader election.

Running sluggo is the easiest way to get started with a KVS for jiffy. You may also choose to use an existing Redis or Memcached system or cluster.

The application communicates with the KVS using whatever protocol/transport is required. The diagram shows a WebSocket connection from the containerized jiffy-application to the KVS on port :4444, as this is how sluggo communicates. Remember that the selection of KVS is arbitrary and an interface is provided to allow the implementer to write their own code to communicate with any KVS.

Application instances are shown accessing a Postgres database on the host network via TCP at 192.168.1.59:5432. In this example we show PostgreSQL accepting connections on its default port (tcp/5432), but any of the supported databases can be used.

When running multiple jiffy-application instances, a load balancer of some sort should be used to route traffic based on end-point, system load or other locally important criteria.
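As one illustration of the simplest possible policy, the sketch below rotates requests across backends in round-robin order using Go's standard reverse proxy. It is not a recommendation over a dedicated load balancer, and it does not consider end-point or system load. The backend addresses are assumptions: 172.17.0.2 appears in the single-instance example, while 172.17.0.3 is a guess at a second container's address.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
	"net/http/httputil"
	"net/url"
	"sync/atomic"
)

// roundRobin forwards each request to the next backend in turn.
type roundRobin struct {
	next    uint64 // request counter; kept first for 64-bit alignment
	targets []*url.URL
	proxies []*httputil.ReverseProxy
}

func newRoundRobin(backends ...string) (*roundRobin, error) {
	if len(backends) == 0 {
		return nil, errors.New("no backends given")
	}
	rr := &roundRobin{}
	for _, b := range backends {
		u, err := url.Parse(b)
		if err != nil {
			return nil, err
		}
		rr.targets = append(rr.targets, u)
		rr.proxies = append(rr.proxies, httputil.NewSingleHostReverseProxy(u))
	}
	return rr, nil
}

// pick returns the index of the backend that should take the next request.
func (rr *roundRobin) pick() int {
	n := atomic.AddUint64(&rr.next, 1) - 1
	return int(n % uint64(len(rr.targets)))
}

// ServeHTTP makes roundRobin usable as an http.Handler.
func (rr *roundRobin) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	rr.proxies[rr.pick()].ServeHTTP(w, r)
}

func main() {
	// Backend addresses are illustrative assumptions.
	rr, err := newRoundRobin("http://172.17.0.2:8080", "http://172.17.0.3:8080")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Show the rotation order without binding a port.
	for i := 0; i < 4; i++ {
		fmt.Println(rr.targets[rr.pick()].Host)
	}
	// To serve traffic, pass rr to http.ListenAndServe on a port of your choice.
}
```

Round-robin is used here only because it is easy to follow; routing on end-point or load would replace the `pick` method with a policy suited to the deployment.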